TMC - Tanzu Mission Control

Consistent K8s security and operations across Teams and Clouds

In this blog post we will take a Tanzu Kubernetes Grid Cluster deployed on VMware Cloud on AWS and integrate it with Tanzu Mission Control. We will make use of the Identity and Access Management, the Compliance Policy Engine and the integrated Backup capabilities of Tanzu Mission Control.

The Tanzu Portfolio

The VMware Tanzu portfolio is a set of products that aims to modernize your infrastructure and applications so that you can deliver better software to production: fast, secure and continuously. In my previous blog posts I focused on “TKG+”, which has since been transformed into the “Tanzu Standard” offering.

Tanzu Standard includes:

- Tanzu Kubernetes Grid (TKG for short)

- Tanzu Mission Control

- Contour as an Ingress controller to provide L7 Load-Balancing

- The Harbor Container Registry

- Prometheus and Grafana for Observability

- Fluent Bit for log forwarding

You can have a look at the Tanzu Standard Solutions brief to get a better overview regarding Tanzu Standard.

I am going to focus on Tanzu Mission Control and its capabilities in this blog post.

Tanzu Mission Control

Tanzu Mission Control, “TMC” for short, lets you manage all your K8s clusters regardless of where they run and how they were deployed. It is a centralized management platform where you can secure and operate your Kubernetes infrastructure across multiple teams and even Clouds. Customers choosing to leverage the “Tanzu Standard” offering with their VMware Cloud deployment are eligible to use Tanzu Mission Control as well.

Tanzu Mission Control addresses a lot of challenges which most companies face when running Kubernetes at scale. We're going to focus on the following in this blog post:

- Streamlined Identity and Access Management across Clusters in various Clouds

- Enforcing Compliance and Security Policies

- Backup of your Applications running in Kubernetes

With these challenges in mind, Tanzu Mission Control supports both the infrastructure and the development teams. It lets development teams consume infrastructure independently via API, which is a huge benefit and surely one of the reasons why the Cloud is so successful.

Moving from a ticket-driven approach to providing infrastructure to a completely self-service approach, where infrastructure can be consumed on demand and via API, really empowers development teams to build and ship their applications faster.

On the other hand, Tanzu Mission Control provides the consistency operators and SREs need to manage, lifecycle and secure the various Kubernetes Clusters. API-driven sounds great, but it usually gets really challenging when dealing with various Cloud Providers. VMware Cloud and TMC ensure a consistent operator experience. Operators can attach upstream-conformant Kubernetes Clusters from any Cloud Provider and leverage the capabilities of TMC to manage and secure those clusters. This way it is possible to secure and support Clusters which have been provisioned in the past by various teams.

Attaching our Cluster

As a first step we need to attach our already created TKG Cluster running in VMware Cloud on AWS to TMC. A future version of TMC, coming soon, will make it possible to create new TKG Clusters in VMConAWS directly from TMC. For the time being we created the cluster “tkg-wl-03” with the tkg-cli and we are going to attach it to Tanzu Mission Control. If you want to know more about how to deploy TKG Clusters on VMware Cloud please read this previous post.

Let's talk quickly about how resources are organized in Tanzu Mission Control. Cluster Groups help you to group your Kubernetes Clusters. Each Cluster you attach or create belongs to a Cluster Group.

Workspaces allow you to group Namespaces of different Clusters and assign them to logical groups. An example would be to align them with environments or projects. You can add existing Namespaces to a Workspace or create new Namespaces from TMC.
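To make the two grouping concepts a bit more concrete, here is a minimal Python sketch of the relationships described above. It is purely illustrative and not the TMC API: the class names `ClusterGroup`, `Workspace` and `Namespace` as well as the second cluster `tkg-wl-04` are assumptions made up for this example.

```python
from dataclasses import dataclass, field
from typing import Set


@dataclass(frozen=True)
class Namespace:
    """A Kubernetes Namespace, identified by the cluster it lives in."""
    cluster: str
    name: str


@dataclass
class ClusterGroup:
    """Groups whole clusters; each cluster belongs to one Cluster Group."""
    name: str
    clusters: Set[str] = field(default_factory=set)


@dataclass
class Workspace:
    """Groups Namespaces, which may come from different clusters."""
    name: str
    namespaces: Set[Namespace] = field(default_factory=set)

    def clusters_spanned(self) -> Set[str]:
        """Return the clusters that contribute Namespaces to this Workspace."""
        return {ns.cluster for ns in self.namespaces}


# The cluster from this post goes into the Cluster Group "prod".
prod_group = ClusterGroup("prod", {"tkg-wl-03"})

# A Workspace cuts across clusters: it collects Namespaces, not clusters.
prod_workspace = Workspace("prod")
prod_workspace.namespaces.add(Namespace("tkg-wl-03", "prod-app"))
# Hypothetical second cluster, only here to show the cross-cluster grouping.
prod_workspace.namespaces.add(Namespace("tkg-wl-04", "prod-app"))

print(sorted(prod_workspace.clusters_spanned()))
```

The point of the sketch is the different granularity: a policy set on a Cluster Group would reach whole clusters, while a policy set on a Workspace reaches individual Namespaces wherever they run.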

I have created the Cluster-Group “prod” and a Workspace called “prod” which is the intended location for our production K8s Clusters.

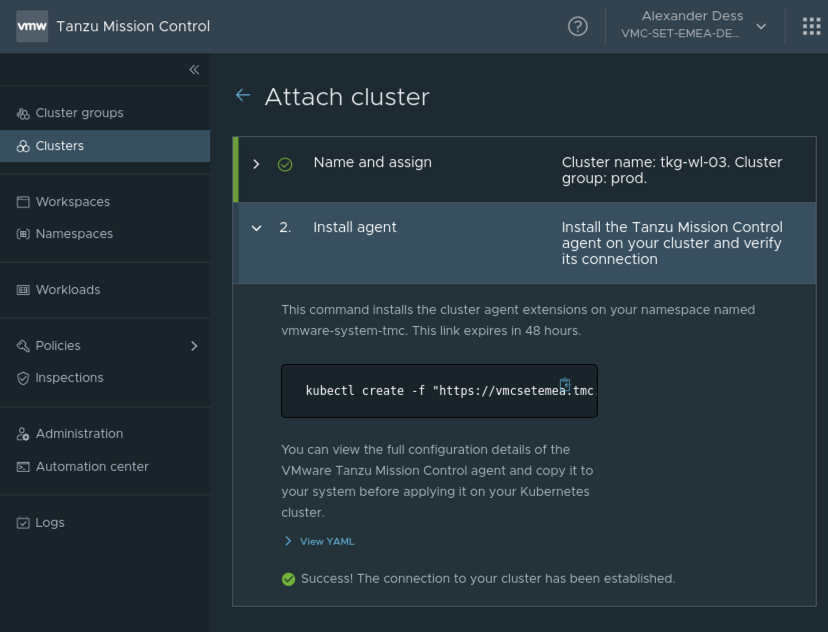

Let's attach our existing Cluster to TMC:

All we need to do is run the single-line installer that the UI presents to us:

```
$ kubectl create -f "https://vmcsetemea.tmc.cloud.vmware.com/installer?id=XXXXX=attach"
namespace/vmware-system-tmc created
configmap/stack-config created
secret/tmc-access-secret created
....
```

We can verify in the UI that the Cluster has been successfully attached and a connection is established.

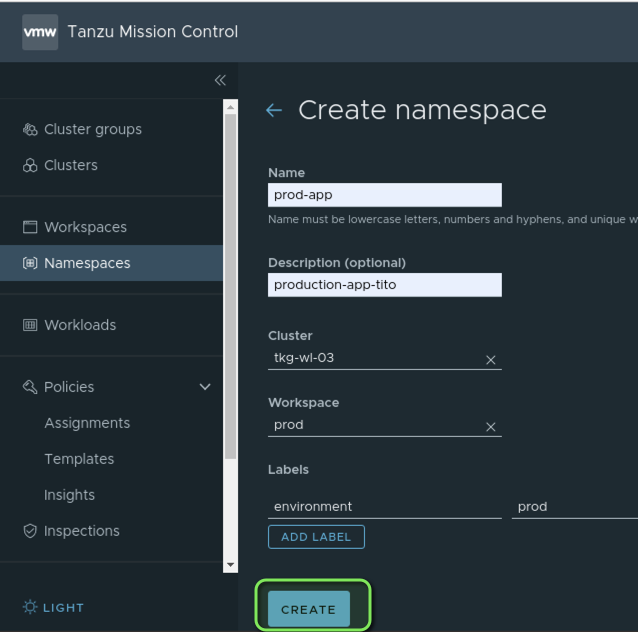

Create a new Namespace for our prod-app

Now that we've attached the Cluster successfully to Tanzu Mission Control we can make use of TMC to manage the Cluster. Next we're going to create a Namespace called “prod-app” which is intended for the deployment of our application. We assign this namespace to our Workspace called “prod”.

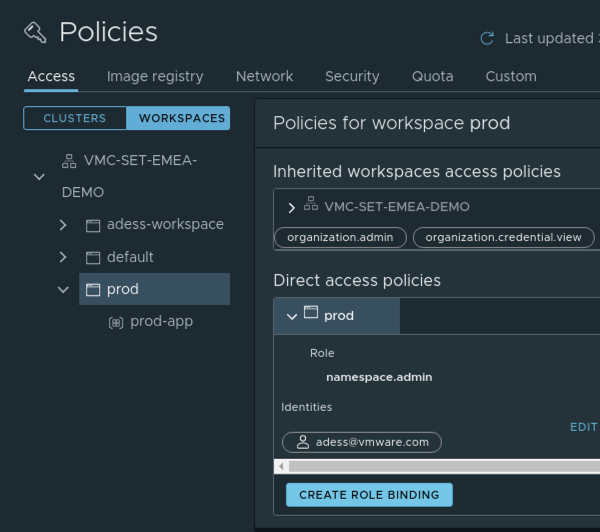

Give Access to our Developers

We've successfully attached our Cluster, created a new Namespace and assigned it to the “prod” Workspace. Next we're going to create an access policy which allows our developers to access all the Namespaces assigned to this Workspace but prevents them from changing the policies applied to it.

Once created, our setting will be applied to all Namespaces attached to the Workspace “prod”. We want our developers to be able to work with full privileges while ensuring that the policies we've set are enforced. Therefore we assign the role “cluster.edit”, which grants admin privileges without permission to change the policies we will set later on.

The screenshot shows the assignment to one “developer” - “adess@vmware.com”:

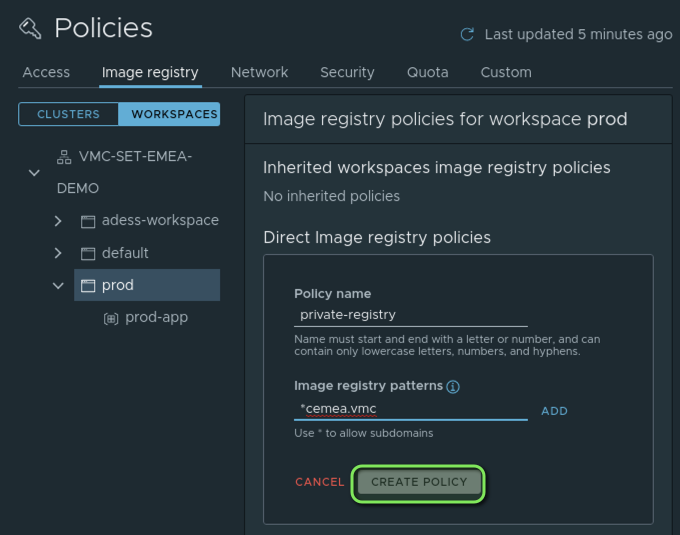

Container Registry Policies

We want only verified images stored in our private Harbor container registry to be deployable in our “prod” Workspace. We can leverage the “Image registry” tab to create such a policy.

You can add multiple registry patterns if you wish. I am going to allow all registries located in my domain “*cemea.vmc”. My private Harbor registry FQDN is “harbor.cemea.vmc”.
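To get a feeling for what such a registry pattern lets through, here is a small Python sketch. It is not TMC's implementation: it assumes glob-style matching of the pattern against the registry host, and it uses the usual Docker convention that an image name without a registry host resolves to `docker.io`. The function names `registry_host` and `is_allowed` are made up for this example.

```python
from fnmatch import fnmatchcase
from typing import List

# Docker convention: images without an explicit registry come from Docker Hub.
DEFAULT_REGISTRY = "docker.io"


def registry_host(image: str) -> str:
    """Return the registry host part of an image reference.

    The first path component only counts as a registry host if it contains
    a "." or ":" or is "localhost"; otherwise it is a Docker Hub namespace.
    """
    first, sep, _rest = image.partition("/")
    if sep and ("." in first or ":" in first or first == "localhost"):
        return first
    return DEFAULT_REGISTRY


def is_allowed(image: str, patterns: List[str]) -> bool:
    """Assumed semantics: allow if the host matches any glob pattern."""
    host = registry_host(image)
    return any(fnmatchcase(host, pattern) for pattern in patterns)


allowed_patterns = ["*cemea.vmc"]
for image in ("harbor.cemea.vmc/library/tito-fe:v1", "adess/vmc-demo-k8s"):
    verdict = "allowed" if is_allowed(image, allowed_patterns) else "denied"
    print(f"{image}: {verdict}")
```

Under these assumptions the image from the private Harbor registry is allowed, while an image referenced only as `adess/vmc-demo-k8s` resolves to Docker Hub and is denied.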

Let's create a simple deployment to verify that our private registry is working and that the policy is enforced.

We will create the following simple deployment:

```
$ cat deploy-from-private-registry.yaml
apiVersion: apps/v1
kind: Deployment
......
......
    spec:
      containers:
      - name: tito
        image: harbor.cemea.vmc/library/tito-fe:v1
        ports:
        - containerPort: 80
```

Apply the deployment to our Cluster:

```
$ kubectl apply -f deploy-from-private-registry.yaml
```

Verify that our pods are running:

```
$ kubectl get po -n prod-app
NAME                       READY   STATUS    RESTARTS   AGE
tito-fe-67b8dfb75d-gpnpt   1/1     Running   0          14s
tito-fe-67b8dfb75d-q72bg   1/1     Running   0          14s
tito-fe-67b8dfb75d-qc4gp   1/1     Running   0          14s
```

Woohooo - our private container registry is working properly and the container images have been pulled from it.

The Image Pull Policy

The next step is to verify that our image policy is working correctly and that we are prevented from deploying an application hosted on a public registry, for example Docker Hub.

I have modified the deployment file to point to the same image stored on Docker Hub:

```
$ cat deploy-docker-hub.yaml
apiVersion: apps/v1
kind: Deployment
......
......
    spec:
      containers:
      - name: tito
        image: adess/vmc-demo-k8s
        ports:
        - containerPort: 80
```

Let's apply this deployment:

```
$ kubectl apply -f deploy-docker-hub.yaml
deployment.apps/tito-fe created
```

Hmmm - it seems our deployment was created, but let's inspect what happens in the background:

```
$ kubectl get po -n prod-app
No resources found in prod-app namespace.
```

Let's inspect our deployment to understand what's happening:

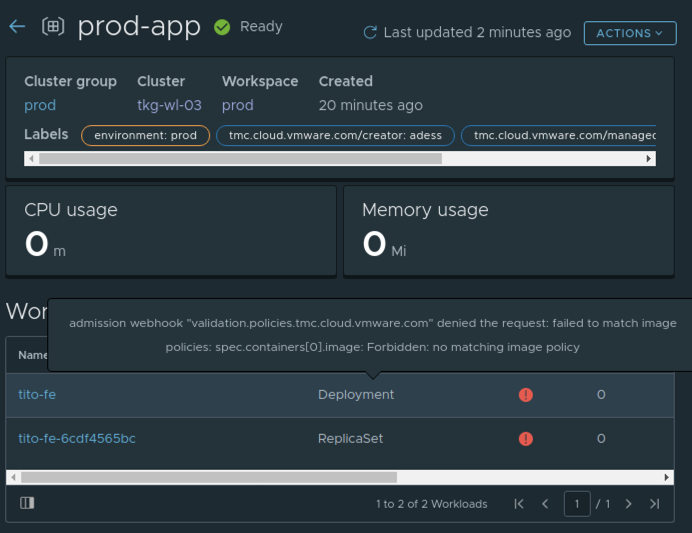

```
$ kubectl describe replicaset -n prod-app
..."validation.policies.tmc.cloud.vmware.com" denied the request: failed to match image policies: spec.containers[0].image: Forbidden: no matching image policy
```

We see that NO pods have been created due to the image policy and we're getting a clear error: “Forbidden: no matching image policy”.

We verified that the policy we applied on the Workspace is working and forbids pulling the container image from the public container registry. We can verify this in the TMC UI as well:

Whether you use an image policy, and which one, is up to you. It definitely makes sense to leverage this functionality for production workloads, for example, to ensure only secure, scanned images are deployed.

Backup Application data from your K8s Clusters

An important and often underestimated topic is the backup of the data stored on your K8s Clusters. Most of us have surely heard the phrase “containers are stateless by default”. There are indeed stateless applications. Take a web search engine like the big G**, for example, where you type your question and hit enter. If you're interrupted at any time or close the window accidentally, you just start over. It's one request and you get one answer.

Your mail application, online banking or our Tito app are stateful applications. Requests are handled in the context of previous transactions and state needs to be persisted. The majority of applications today are stateful applications. Kubernetes provides persistent storage to applications via Persistent Volume Claims (PVC for short). Our Tito application, for example, stores its requests in a MySQL database which is backed by a Persistent Volume leveraging our vSAN Datastore in VMC:

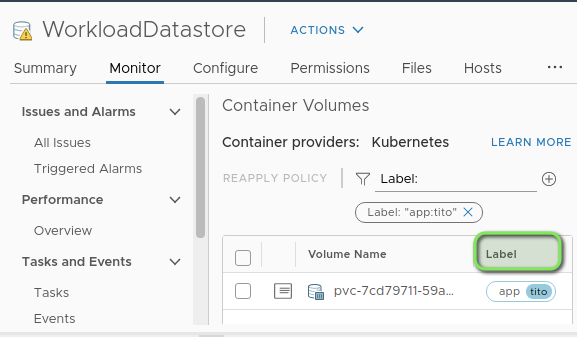

Let's show this Persistent Volume in K8s and vSphere:

```
$ kubectl get pvc -A
NAMESPACE   NAME             STATUS   VOLUME
prod-app    mysql-pv-claim   Bound    pvc-7cd79711-59ad-49d2-8e9d-e4c8ceb6fc6f

$ kubectl describe pvc -A | grep -i labels
Labels: app=tito
```

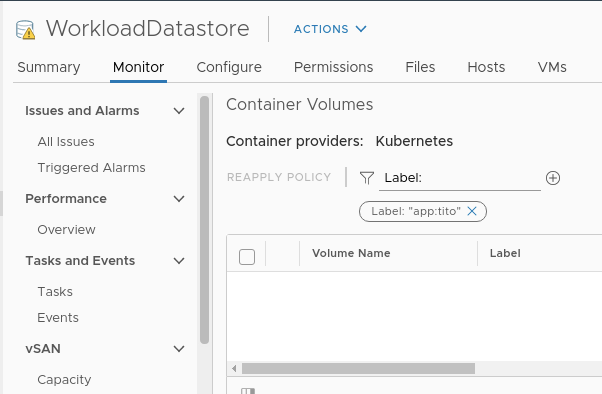

We've applied a label to the Persistent Volume Claim and can therefore easily locate it in the vSphere UI. It's using the “vSAN default Storage Class” as defined in the K8s Storage Class. The great thing here is that you can leverage vSAN Storage policies by creating them in the vSphere UI and using them directly from your K8s Clusters. For example, you could have a development storage policy with no redundancy to save space and a production policy which ensures site replication of your data.

Filter the Persistent Volume created in K8s by the label:

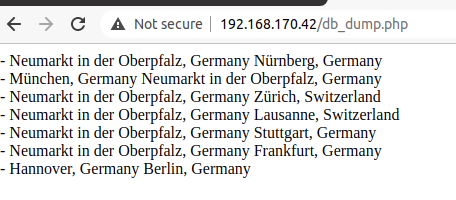

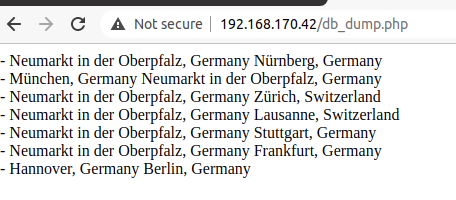

Our app, which you can try at http://tito.alexdess.cloud/, includes a “db-dump” function which shows you the current database entries. Currently our database has these entries:

Next we're going to use Tanzu Mission Control to back up our Namespace to an S3 bucket located in our connected VPC.

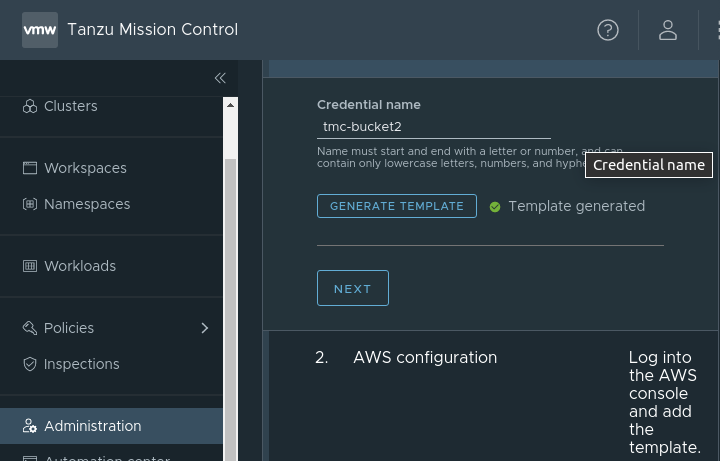

In the “Administration” tab of Tanzu Mission Control you need to create the Data Protection Credentials which will be used by Tanzu Mission Control and Velero to back up your data to the corresponding S3 bucket.

Let's create a Data Protection Credential in Tanzu Mission Control

Download and execute the CloudFormation template in your AWS account. That's it: simple, fast, failproof and straightforward!

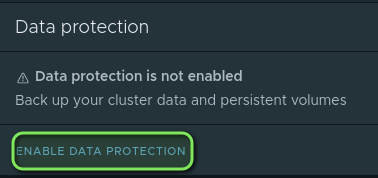

The next step is to activate the “Data Protection” feature for the particular Cluster we want to protect. You can do this by navigating back to “Clusters” and “Data Protection”.

When we activate Data Protection, Tanzu Mission Control creates a separate Namespace for Velero, the backup tool used in the backend to do the actual backups to our S3 bucket. The deployment and configuration happen automatically. All you will notice is a new namespace with Velero pods running on your cluster:

```
$ kubectl get po -n velero
NAME                      READY   STATUS    RESTARTS   AGE
restic-77gm5              1/1     Running   0          37s
restic-c6q8t              1/1     Running   0          36s
restic-vxwgq              1/1     Running   0          35s
velero-5884ddd89f-8br6c   1/1     Running   0          47s
```

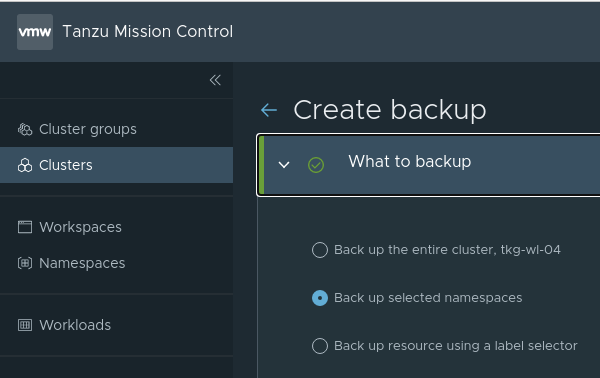

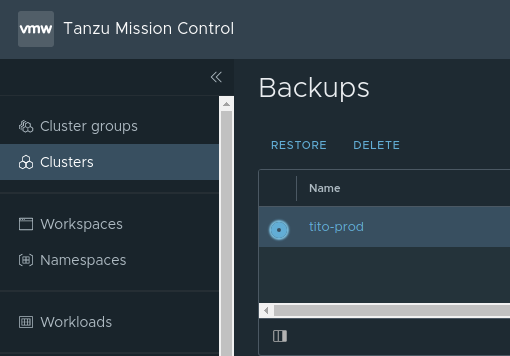

Let's create a backup of our tito prod application from the TMC UI:

We select the Cluster we want to back up and the type of backup, for example “selected Namespaces”:

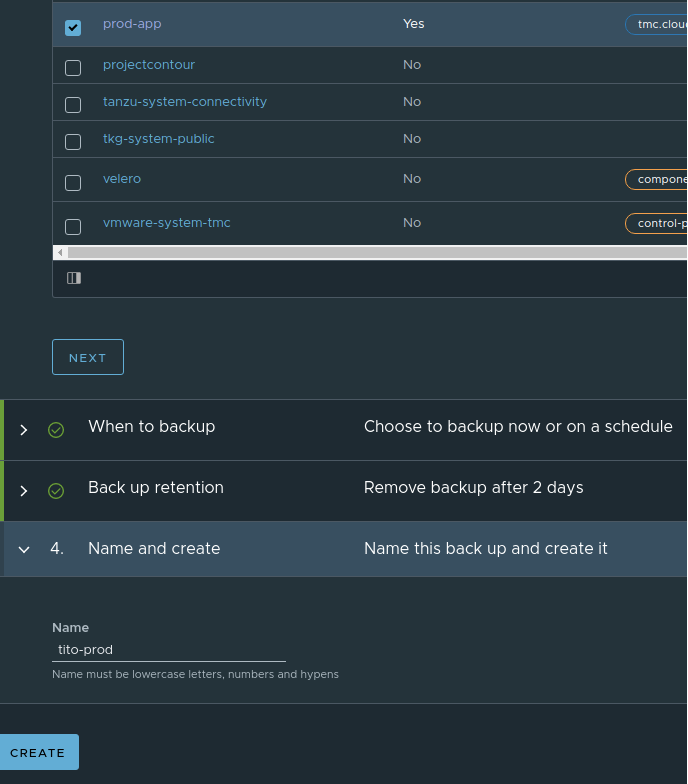

Select the Namespace we want to back up. In our case it's the “prod-app” Namespace:

As you saw above, you can create a backup of the entire Cluster or select the namespaces you want to back up.
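Since TMC uses Velero in the backend, the two scopes can be pictured as Velero `Backup` resources. The following Python sketch builds such manifests by hand. It is an illustration under assumptions, not what TMC generates: the backup names are invented, the helper `velero_backup` is hypothetical, and the TTL shown is simply Velero's documented default of 30 days.

```python
import json
from typing import List, Optional


def velero_backup(name: str,
                  namespaces: Optional[List[str]] = None,
                  ttl: str = "720h0m0s") -> dict:
    """Build a Velero Backup manifest as a plain dict.

    namespaces=None stands for a whole-cluster backup: Velero includes all
    namespaces when spec.includedNamespaces is left out.
    """
    spec = {"ttl": ttl}
    if namespaces:
        spec["includedNamespaces"] = list(namespaces)
    return {
        "apiVersion": "velero.io/v1",
        "kind": "Backup",
        # Velero resources live in the namespace Velero itself runs in.
        "metadata": {"name": name, "namespace": "velero"},
        "spec": spec,
    }


# Backup names are made up for this example.
namespace_backup = velero_backup("prod-app-backup", ["prod-app"])
cluster_backup = velero_backup("full-cluster-backup")

print(json.dumps(namespace_backup, indent=2))
```

The only difference between the two scopes in this sketch is whether `includedNamespaces` is present, which mirrors the choice offered in the TMC UI.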

Now we're going to delete the whole namespace on the K8s Cluster, which contains our application and all its data:

```
$ kubectl delete ns prod-app
namespace "prod-app" deleted
```

All resources have been deleted including our Persistent Volume which was viewable on the vSAN Datastore before:

Let's try to restore our application to its latest state by selecting our backup and clicking “RESTORE”.

Immediately Tanzu Mission Control starts the restore process via Velero and the Namespace including all the resources is created again on our Cluster:

```
$ kubectl get po -n prod-app
NAME                      READY   STATUS    RESTARTS   AGE
mysql-7fc6d65488-l2n9t    1/1     Running   0          17s
titofe-5f457dd8d8-2dlqj   1/1     Running   0          17s
titofe-5f457dd8d8-fqfdd   1/1     Running   0          17s
titofe-5f457dd8d8-hcsbm   1/1     Running   0          17s
titofe-5f457dd8d8-z2mrs   1/1     Running   0          17s

$ kubectl get pvc -n prod-app
NAME             STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS   AGE
mysql-pv-claim   Bound    pvc-4e5e10af-ebf0-4a82-b669-a78836fc84e6   20Gi       RWO            default        24s
```
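If you run such a restore test regularly, you may not want to eyeball the pod list every time. Here is a minimal Python sketch that checks the pod listing from above. It assumes the default column layout of `kubectl get pods` (NAME, READY, STATUS, ...), and the function name `not_ready` is made up for this example. For real automation, `kubectl wait` or JSON output would be the more robust choice.

```python
from typing import List

# Pod listing after the restore, copied from the output above.
RESTORED_PODS = """\
NAME                      READY   STATUS    RESTARTS   AGE
mysql-7fc6d65488-l2n9t    1/1     Running   0          17s
titofe-5f457dd8d8-2dlqj   1/1     Running   0          17s
titofe-5f457dd8d8-fqfdd   1/1     Running   0          17s
titofe-5f457dd8d8-hcsbm   1/1     Running   0          17s
titofe-5f457dd8d8-z2mrs   1/1     Running   0          17s
"""


def not_ready(table: str) -> List[str]:
    """Return the names of pods that are not Running with all containers ready."""
    lines = [line for line in table.strip().splitlines() if line.strip()]
    failing = []
    for line in lines[1:]:  # skip the header row
        name, ready, status, *_ = line.split()
        ready_count, total = ready.split("/")
        if status != "Running" or ready_count != total:
            failing.append(name)
    return failing


print(not_ready(RESTORED_PODS))  # prints []
```

An empty list means every pod in the restored Namespace reports `Running` with all of its containers ready.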

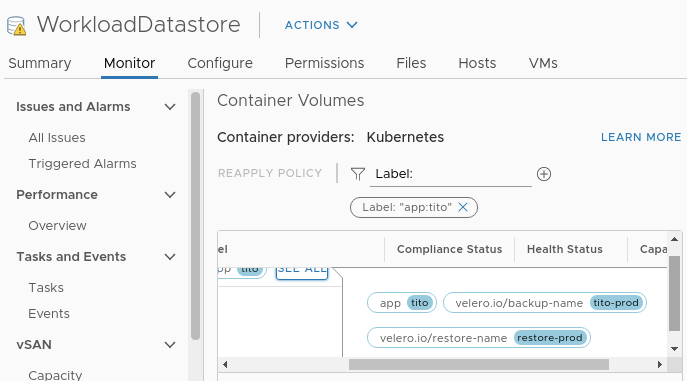

Let's verify that our volume is visible in the vSphere UI as well. We can see that Velero has applied additional labels to the volume:

Last but not least, let's verify the functionality of our application and the entries in the database:

Wooohoooo! We can see that we successfully restored our K8s application to a TKG Cluster running on VMware Cloud, leveraging Tanzu Mission Control. The S3 bucket is located in the connected VPC of our VMConAWS SDDC.

This post already got longer than planned, but it is still only scratching the surface of what's possible when combining TMC and VMware Cloud.

In a multi-cloud world security and governance are becoming more important than ever before. Tanzu Mission Control helps to simplify provisioning and lifecycle management and to apply security and governance policies to your K8s Clusters, no matter if they already exist or still need to be provisioned on-prem or in the Cloud.

Thanks for reading and drop me a message if you have suggestions or remarks :-).

Useful Links: